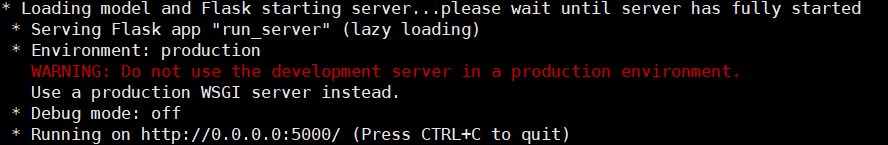

I fine-tuned GPoeT-2 on an Nvidia GeForce RTX 2060 for 5 epochs with a batch size of 2, and it took about 5 hours. To test out my model, I used another Hugging Face script, run_generation.py, and ran the following command:

I then fed in the first part of The Fall of the House of Usher when prompted for input text. The result was certainly Edgar Allan Poe-like, but it…well…read for yourself:

During the whole of a dull, dark, and soundless day in the autumn of the year, when the clouds hung oppressively low in the heavens, I was again thrown headlong into a fire. My imagination ran wild with visions of the wildest of the parched earth–of the burning and the red-hot Egypt–of the dreary, the ghastly moon–of the chilly and the mournful, and the unhallowed, and the pungent Egypt–of the burning and the fissure. I saw the burning and the burning, and the burning of the gutter and I knew that I had all along been consumed.
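For readers who want to try the generation step from Python rather than through run_generation.py, here is a minimal sketch. It is not the script I ran: it assumes a recent version of Transformers with the `AutoTokenizer` and `AutoModelForCausalLM` classes, the output directory name is hypothetical, and the sampling settings (`top_k`, `top_p`, `max_length`) are illustrative guesses rather than the values behind the story above.

```python
# Opening words of The Fall of the House of Usher, used as the prompt.
PROMPT = (
    "During the whole of a dull, dark, and soundless day in the autumn of "
    "the year, when the clouds hung oppressively low in the heavens,"
)


def generate_story(model_dir, prompt=PROMPT, max_length=200, seed=None):
    """Sample a continuation of `prompt` from a fine-tuned GPT-2 checkpoint.

    `model_dir` is assumed to be a directory holding both the fine-tuned
    model weights and the tokenizer files.
    """
    # Imported lazily so this file loads even without the heavy dependencies.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    if seed is not None:
        torch.manual_seed(seed)  # makes the sampling repeatable

    tokenizer = AutoTokenizer.from_pretrained(model_dir)
    model = AutoModelForCausalLM.from_pretrained(model_dir)
    model.eval()

    inputs = tokenizer(prompt, return_tensors="pt")
    with torch.no_grad():
        output_ids = model.generate(
            **inputs,
            max_length=max_length,  # total length in tokens, prompt included
            do_sample=True,         # sample instead of greedy decoding
            top_k=50,               # illustrative sampling settings
            top_p=0.95,
            pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no pad token
        )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Example call (hypothetical output directory from the fine-tuning run):
# print(generate_story("./gpoet2-output"))
```

Because the text is sampled, every run gives a different tale unless a seed is fixed.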

eval_data_file = './data/poe_eval.txt' \

The line_by_line option means that the model treats each line in the dataset as a separate example. I set this parameter because of GPU memory constraints, so that fewer words would be loaded into the model at a time. For the same reason, I set the batch size to 2. (See the docs for a complete list of possible arguments.)

Even though fine-tuning is a lot faster than pre-training from scratch, transformers are hefty networks, so it’s still a computationally expensive process.
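To picture what line-by-line examples and a batch size of 2 mean in practice, here is a small plain-Python sketch. It only imitates the idea and is not the code inside run_language_modeling.py; the function names and the sample text are made up for illustration.

```python
import math


def line_by_line_examples(text):
    """Treat every non-empty line as its own training example."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def batches(examples, batch_size=2):
    """Yield consecutive groups of at most `batch_size` examples."""
    for start in range(0, len(examples), batch_size):
        yield examples[start:start + batch_size]


# Placeholder text standing in for a few lines of the training file.
sample = "line one\n\nline two\nline three\nline four\nline five\n"

examples = line_by_line_examples(sample)
batched = list(batches(examples, batch_size=2))

# One optimizer step per batch, so this is the number of steps in one epoch.
steps_per_epoch = math.ceil(len(examples) / 2)

print(len(examples), "examples in", steps_per_epoch, "batches")
```

A smaller batch means less text sits in GPU memory at once, at the cost of more steps per epoch.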

To fine-tune GPT-2 on my Poe dataset, I used the run_language_modeling.py script from the Transformers GitHub repository and ran the following command in the terminal:


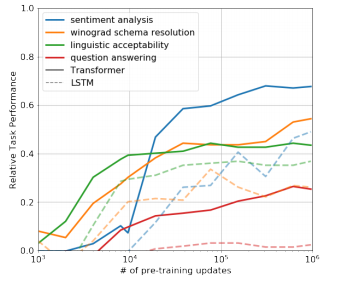

Powerful Transformer networks like GPT-2 are trained on a huge amount of data (GPT-2 was trained on text from 8 million webpages), and this is part of what makes them so powerful. Training a large network on this much data takes a long time and a lot of computational power, so we don’t want to retrain these networks from scratch. Instead, we fine-tune them. This means we train the network on a new dataset or for a new task, but we use the pretrained model as our initial setting. Because the model’s parameters are already set, we don’t need to do as much training to fine-tune the model.

To fine-tune GPT-2 using the Hugging Face Transformers library, you first need to have PyTorch or TensorFlow installed (I use PyTorch). Then, you need to install the Transformers library.
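Here is a toy illustration of why starting from already-trained parameters needs less training. It has nothing to do with GPT-2 itself: it runs gradient descent on a single made-up weight, once from a blank start and once from a "pretrained" value that already sits close to the new target. All numbers are invented for the example.

```python
def steps_to_converge(start, target, lr=0.1, tol=1e-3, max_steps=10_000):
    """Count gradient-descent steps until the weight is within `tol` of `target`.

    The loss is (w - target) ** 2, so its gradient is 2 * (w - target).
    """
    w = start
    for step in range(max_steps):
        if abs(w - target) < tol:
            return step
        grad = 2 * (w - target)
        w -= lr * grad
    return max_steps


target = 5.0  # best weight for the new task (made up)

from_scratch = steps_to_converge(start=0.0, target=target)
from_pretrained = steps_to_converge(start=4.5, target=target)

print("steps from scratch:   ", from_scratch)
print("steps from pretrained:", from_pretrained)
```

The run that starts from the nearby "pretrained" value finishes in fewer steps, which is the same reason fine-tuning is cheaper than pre-training.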

I split his works into a training and an evaluation set, both of which contain stories and poems in their entirety. The training set is larger and used to actually train the model, while the evaluation set is used to test how well the model performs on unseen data.
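A split like this can be sketched in a few lines of Python. This is only an illustration under my own assumptions: `split_works` is a hypothetical helper, the 20% evaluation share is an arbitrary choice, and the placeholder strings stand in for the full text of each story or poem.

```python
import random


def split_works(works, eval_fraction=0.1, seed=0):
    """Split whole works into (train, eval) lists without cutting any work."""
    if not 0 < eval_fraction < 1:
        raise ValueError("eval_fraction must be between 0 and 1")
    shuffled = list(works)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    n_eval = max(1, round(len(shuffled) * eval_fraction))
    return shuffled[n_eval:], shuffled[:n_eval]


# Placeholders; in practice each item would be one complete story or poem.
works = [f"Full text of work {i}" for i in range(10)]

train_works, eval_works = split_works(works, eval_fraction=0.2)

print(len(train_works), "works for training,", len(eval_works), "for evaluation")
```

Shuffling whole works, rather than individual lines, keeps every story and poem intact on one side of the split.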


I’ve dubbed my fine-tuned model GPoeT-2, and I used it to generate an eerie tale in time for Halloween.

Creating my dataset

Because all of Poe’s works are in the public domain, I could download everything he ever published from Project Gutenberg to create a dataset.


I love Halloween! And I love scary stories! In school, we read a lot of Edgar Allan Poe. (I grew up near Baltimore, and boy did my teachers love a local talent.) I liked everything we read, but the one that stood out to me the most was The Pit and the Pendulum, a dark tale about a prisoner being tortured during the Spanish Inquisition. This year for Halloween, I decided to write a spooky story in the style of Poe. Why write a story when I can train a text generator to write one for me? I fine-tuned OpenAI’s GPT-2 model on the complete works of Edgar Allan Poe using the Hugging Face Transformers library.
